Update #2.5

13 Sep 2018

Experiment 1: Snapshots

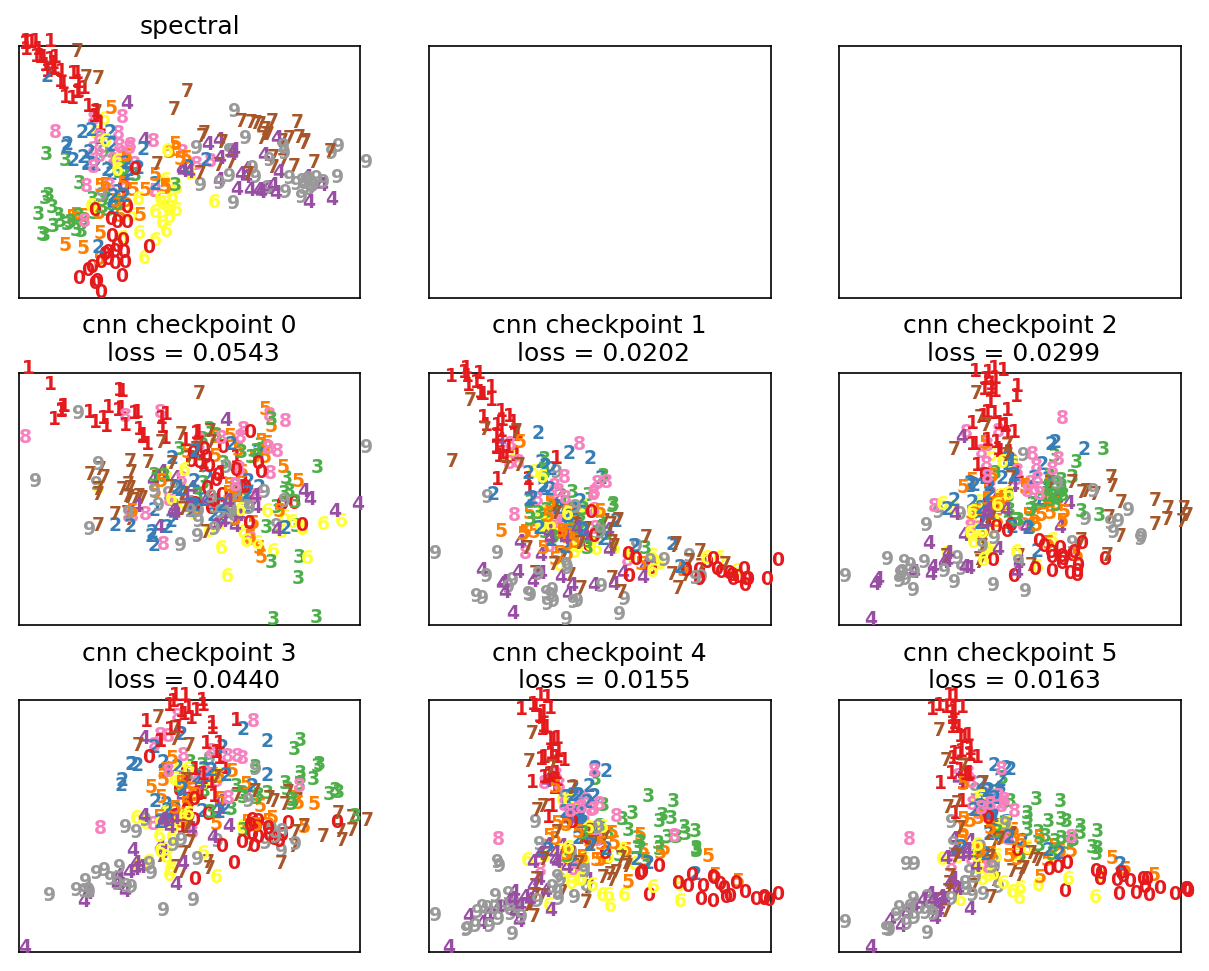

In the following experiment, I used a training set of 6,000 digits with a mini-batch size of 200. During the training phase, I saved a checkpoint of the network after every 20 epochs, up to a total of 100 training epochs. Each checkpointed net was then used to predict an embedding matrix for a test set of 300 MNIST digits. The corresponding pairwise loss was also computed.
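To make the snapshot schedule concrete, here is a small sketch of the arithmetic implied by the numbers above. The helper names `checkpoint_epochs` and `updates_per_epoch` are hypothetical and only illustrate the bookkeeping; they are not taken from the actual training code.

```python
def checkpoint_epochs(total_epochs, every):
    """Return the epochs after which a snapshot would be saved (hypothetical helper)."""
    return [e for e in range(1, total_epochs + 1) if e % every == 0]


def updates_per_epoch(train_size, batch_size):
    """Number of mini-batch updates in one pass over the training set (ceil division)."""
    return -(-train_size // batch_size)


# Values taken from the experiment description above.
train_size, batch_size = 6000, 200
epochs = checkpoint_epochs(total_epochs=100, every=20)
steps = updates_per_epoch(train_size, batch_size)

print(epochs)                       # [20, 40, 60, 80, 100]
print(steps)                        # 30
print([e * steps for e in epochs])  # [600, 1200, 1800, 2400, 3000]
```

Under these assumptions there are five snapshots, taken after 600, 1,200, 1,800, 2,400 and 3,000 mini-batch updates.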

Experiment 2: Similarity measure

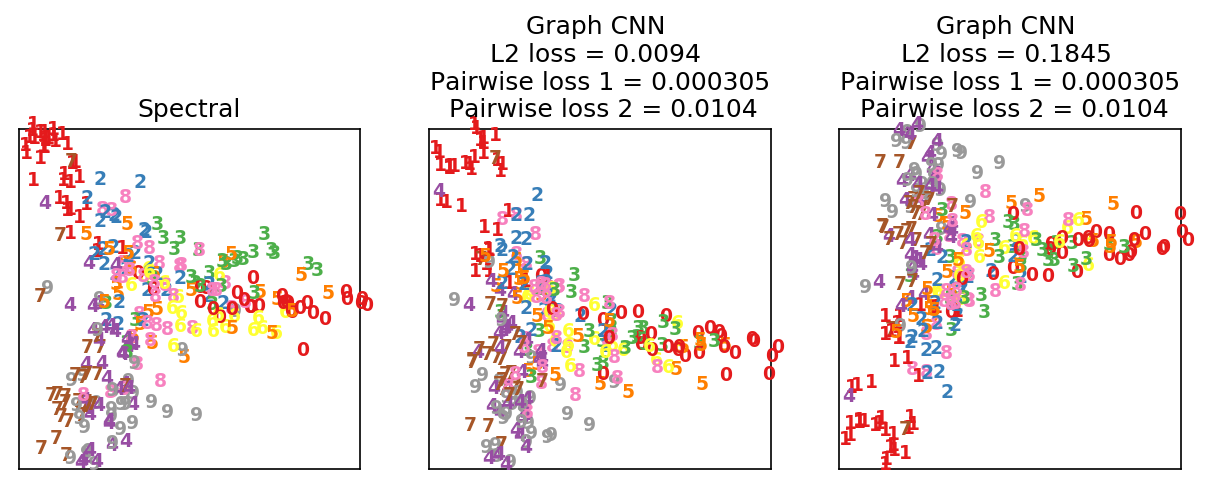

The orientation within the embedding manifold is arbitrary when performing dimensionality reduction, so we need a similarity measure that is invariant under both rotations and reflections. In the following figure, we see two graph CNN outputs that are equivalent except for the orientation of the points. The left output has a lower L2 loss because its orientation matches the spectral output. However, the two CNN outputs have the same pairwise loss, which is the property we want.

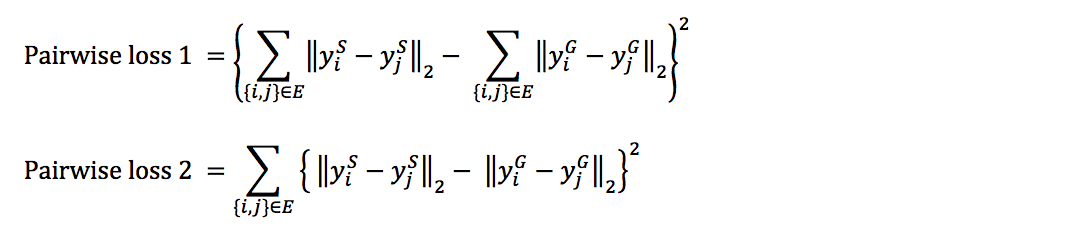

We define the pairwise loss functions as follows:

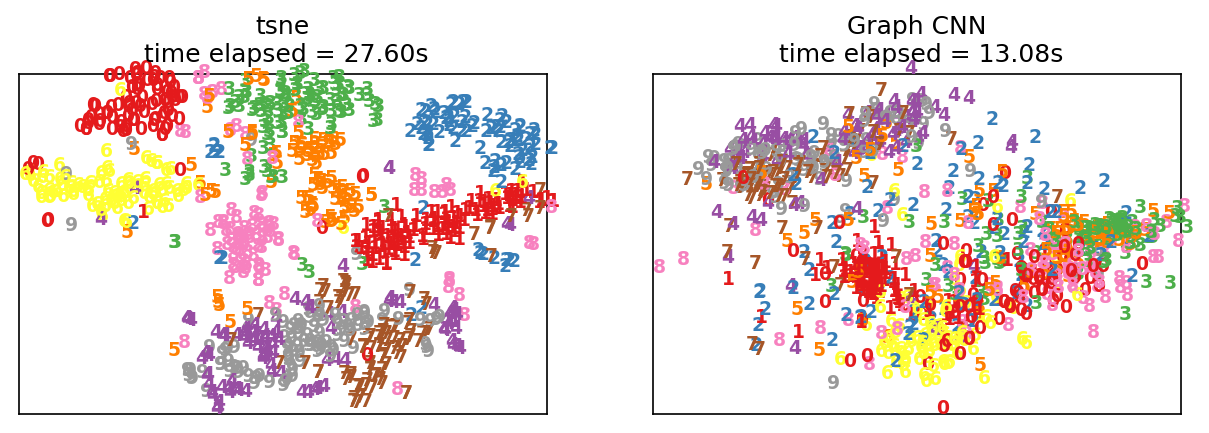
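The exact definition is the one in the figure above. As a rough illustration of why a loss built on pairwise distances ignores orientation, here is a minimal NumPy sketch. It assumes one plausible form (the mean squared difference between the two pairwise Euclidean distance matrices), which may differ from the definition actually used; the function names and the random stand-in data are hypothetical.

```python
import numpy as np


def pairwise_distances(X):
    """Euclidean distance between every pair of rows of X (shape: n x n)."""
    diff = X[:, None, :] - X[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def l2_loss(pred, target):
    """Mean squared Euclidean distance between corresponding points."""
    return float(np.mean(np.sum((pred - target) ** 2, axis=1)))


def pairwise_loss(pred, target):
    """ASSUMED form: mean squared difference of the pairwise distance matrices."""
    return float(np.mean((pairwise_distances(pred) - pairwise_distances(target)) ** 2))


rng = np.random.default_rng(0)
target = rng.normal(size=(300, 2))  # stand-in for a 2-D spectral embedding

theta = np.pi / 3
rotation = np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta),  np.cos(theta)]])
reflection = np.diag([1.0, -1.0])

# Same point cloud as the target, but rotated and then reflected.
pred = target @ rotation.T @ reflection

print("L2 loss:      ", l2_loss(pred, target))        # clearly non-zero
print("pairwise loss:", pairwise_loss(pred, target))  # zero up to rounding error
```

Rotations and reflections preserve distances between points, so any loss that depends only on the pairwise distance matrix is unchanged by them, while the pointwise L2 loss penalises the change in orientation.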

Experiment 3: Learning t-SNE

In the following experiment, I used a training set of 10,000 digits with a mini-batch size of 500. The network was trained for a total of 500 epochs, and the entire experiment took around seven hours. Below are the outputs for a test set of 1,000 digits (not very promising yet).